What this blog covers:

- Snowflake offers scale-out capabilities to process more data at lower costs but compute costs still explode when data volumes grow unpredictably.

- Understand the key factors affecting Snowflake costs.

- How Kyvos makes Snowflake cost optimization possible with its Smart OLAP™ technology.

- Benchmarking numbers that prove Kyvos is 1695x more cost-effective and 600x faster than Snowflake.

When you rent a car on holiday, you pay based on the distance you travel: the longer the distance, the higher the cost. The same relationship holds between data and the cloud. In a world where data increases every minute and businesses thrive on data-driven insights, you need to explore more and more data to make intelligent business decisions. Since you can’t control the influx of data, optimizing cost is vital.

Cloud data warehouses like Snowflake offer relational, scale-out capabilities with the promise of making more data accessible at a lower cost and with fewer data management troubles. But the real concern starts when data inevitably grows over time, leaving organizations with unpredictable compute costs. Heavy analytics on massive amounts of data can make your bill heftier than usual, and at the end of each month, you may realize that you have paid a fortune for a workload that could have run at a far lower price.

The key question is – how do you control these costs? How do you make your data accessible to business users and analysts so that they can interact with it in a self-service way, without worrying about exploding compute costs? Is there a better way to achieve Snowflake cost optimization?

Let’s take the case where users want to analyze two years of data, which could be billions or trillions of rows. They want to explore this data without limits on size, cardinality, or granularity, and still get quick answers to their business questions.

There should be an optimized way of organizing and interacting with data while keeping costs in check.

Factors that Impact Snowflake Costs

Before we see how you can achieve Snowflake cost optimization, let us first take a look at some of the key factors that add to costs.

Resource-Intensive Queries

When users fire complex queries, costs are bound to escalate. For instance, suppose you make your data available to 1000 users, each of whom submits 10 ad hoc queries a day to the data warehouse, and each query has multiple Joins or Group Bys and scans billions or even trillions of rows at query time. In this case, every query is resource-intensive, and your register keeps ringing with each query fired to the data warehouse.
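To make the scale of that example concrete, here is a minimal Python sketch of the arithmetic. The user and query counts come from the example above; the rows scanned per query is a hypothetical figure chosen only for illustration, and the function names are not part of any product API.

```python
def daily_query_count(users: int, queries_per_user: int) -> int:
    """Total ad hoc queries hitting the warehouse per day."""
    return users * queries_per_user


def daily_rows_scanned(users: int, queries_per_user: int, rows_per_query: int) -> int:
    """Rows scanned per day, assuming every query scans rows_per_query rows."""
    return daily_query_count(users, queries_per_user) * rows_per_query


# Figures from the example: 1000 users, 10 queries each per day.
queries = daily_query_count(1000, 10)

# Hypothetical assumption: each query scans one billion rows.
rows = daily_rows_scanned(1000, 10, 1_000_000_000)

print(queries)        # 10000
print(f"{rows:,}")    # 10,000,000,000,000
```

Even with a modest per-query scan size, the daily volume of work grows multiplicatively with users and queries, which is why per-query compute matters so much.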

User Concurrency – More Users, More Queries

Over time, as the number of business users grows, the number of queries submitted to your data warehouse grows too. Ultimately, you may have to deal with a situation where lots of users run heavy queries just because the data is available to them. All of this can lead to a significant rise in your Snowflake costs.

The Impact of Cold Queries on Snowflake Costs

Data warehouse platforms like Snowflake cache frequently used data and preserve query results so that the data does not have to move from storage to compute every time you ask for it. This saves you some money, but when you fire a query for non-cached data, the platform has to do all the heavy lifting of reading the data from storage and moving it to compute, which increases both response time and cost.
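The difference between a warm and a cold query can be illustrated with a toy model in Python. This is only a sketch of the general idea of a result cache in front of an expensive scan; the `ToyWarehouse` class and its fields are hypothetical and do not represent how Snowflake implements caching.

```python
class ToyWarehouse:
    """Toy model: a result cache in front of an expensive storage scan."""

    def __init__(self, rows):
        self.rows = rows            # list of (region, amount) tuples
        self.result_cache = {}      # query key -> cached result
        self.storage_scans = 0      # number of full scans we had to pay for

    def total_for_region(self, region):
        if region in self.result_cache:
            # Warm query: served from the cache, no scan.
            return self.result_cache[region]
        # Cold query: read every row from "storage".
        self.storage_scans += 1
        total = sum(amount for r, amount in self.rows if r == region)
        self.result_cache[region] = total
        return total


wh = ToyWarehouse([("EU", 10), ("US", 20), ("EU", 5)])
wh.total_for_region("EU")   # cold: scans storage
wh.total_for_region("EU")   # warm: cache hit
wh.total_for_region("US")   # cold again: a different query
print(wh.storage_scans)     # 2
```

Repeating the same query is cheap, but every new combination of filters pays the full scan cost again.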

Smart OLAP™ Technology to the Rescue

Kyvos helps you cut down querying costs on Snowflake through its cloud-native Smart OLAP™ technology. It reads data from Snowflake and builds pre-aggregated OLAP cubes that are stored in the cloud itself. The best thing is that these aggregations can be performed on extremely large datasets, regardless of the number of dimensions, measures, or granularity. Now, when a query is fired, it is served directly from the cube. This reduces compute costs significantly and the queries return fast too!

Optimize Resource Utilization with Build-Once-Query-Multiple-Times Approach

Once the cube is built, minimal resources are consumed per query, so users can run unlimited queries on a massive amount of data without incurring any additional cost. Your query doesn’t go through heavy processing at query time because the heavy lifting has already been done. As all the combinations have already been calculated and the aggregations are already available, your query becomes lightweight. This lets you scale both the number of users and the number of queries fired concurrently. Our build-once-query-multiple-times approach enables Snowflake cost optimization while ensuring that any number of users can fire any number of queries.
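The build-once-query-multiple-times idea can be sketched in a few lines of Python. This is a simplified illustration of pre-aggregation in general, not the Smart OLAP™ implementation: the dimension names, the `build_cube` and `query` helpers, and the sample rows are all hypothetical, and a production engine would not enumerate combinations this naively.

```python
from collections import defaultdict
from itertools import combinations

DIMENSIONS = ("region", "year", "product")  # hypothetical dimension names


def build_cube(rows):
    """Pre-aggregate the 'sales' measure for every combination of dimensions.

    Each key is (dimension_names, dimension_values); each value is summed sales.
    """
    cube = defaultdict(int)
    for row in rows:
        for k in range(len(DIMENSIONS) + 1):
            for dims in combinations(DIMENSIONS, k):
                values = tuple(row[d] for d in dims)
                cube[(dims, values)] += row["sales"]
    return dict(cube)


def query(cube, **filters):
    """Answer a query with a single lookup; the raw rows are never scanned."""
    dims = tuple(d for d in DIMENSIONS if d in filters)
    values = tuple(filters[d] for d in dims)
    return cube.get((dims, values), 0)


rows = [
    {"region": "EU", "year": 2023, "product": "A", "sales": 100},
    {"region": "EU", "year": 2024, "product": "B", "sales": 50},
    {"region": "US", "year": 2024, "product": "A", "sales": 70},
]
cube = build_cube(rows)  # the heavy work happens once, at build time

print(query(cube))                           # 220 (grand total)
print(query(cube, region="EU"))              # 150
print(query(cube, year=2024, product="A"))   # 70
```

After the build step, each query is a dictionary lookup whose cost does not depend on the number of raw rows, which is the property the approach relies on.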

Reduce Snowflake Costs Further with Elastic Architecture

Another key advantage is that you can scale up or down the querying capability through our scheduled cluster scaling feature that allows you to increase or decrease your query engines depending on the load. This way, you can optimize the resource utilization and pay only for the resources you use.
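As a rough illustration of why scheduled scaling saves money, the Python sketch below compares a schedule-driven cluster with one that runs at peak capacity all day. The schedule, the engine counts, and the function names are hypothetical assumptions for this example and are not Kyvos configuration.

```python
# Hypothetical schedule: (start_hour, end_hour, query_engines).
# Hours are 0-23 and the end hour is exclusive.
SCHEDULE = [
    (0, 8, 1),    # overnight: minimal capacity
    (8, 18, 6),   # business hours: peak load
    (18, 24, 2),  # evening: reduced capacity
]


def engines_for_hour(hour: int) -> int:
    """Number of query engines that should be running at the given hour."""
    for start, end, engines in SCHEDULE:
        if start <= hour < end:
            return engines
    raise ValueError(f"hour out of range: {hour}")


def engine_hours_per_day() -> int:
    """Total engine-hours consumed per day under the schedule."""
    return sum((end - start) * engines for start, end, engines in SCHEDULE)


print(engines_for_hour(9))      # 6
print(engine_hours_per_day())   # 80
print(24 * 6)                   # 144 engine-hours if peak capacity ran all day
```

Under these assumed numbers, following the schedule uses 80 engine-hours a day instead of 144.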

Save Costs on Ad Hoc Analysis

Enterprises often have to deal with cost escalations while performing ad hoc analysis. If you run the same query frequently, caching mechanisms can retrieve the result without affecting your compute cost. But what if you run cold queries with different combinations? You need to scan your data multiple times and bear the cost of excessive processing at query time.

If you want to learn more about how Kyvos reduces BI costs on Snowflake, download our ebook.

Benchmarking Results: Performance + Costs

Our Smart OLAP™ technology delivers unmatched BI performance through its BI acceleration layer on modern data platforms and provides limitless scalability for thousands of users across the enterprise.

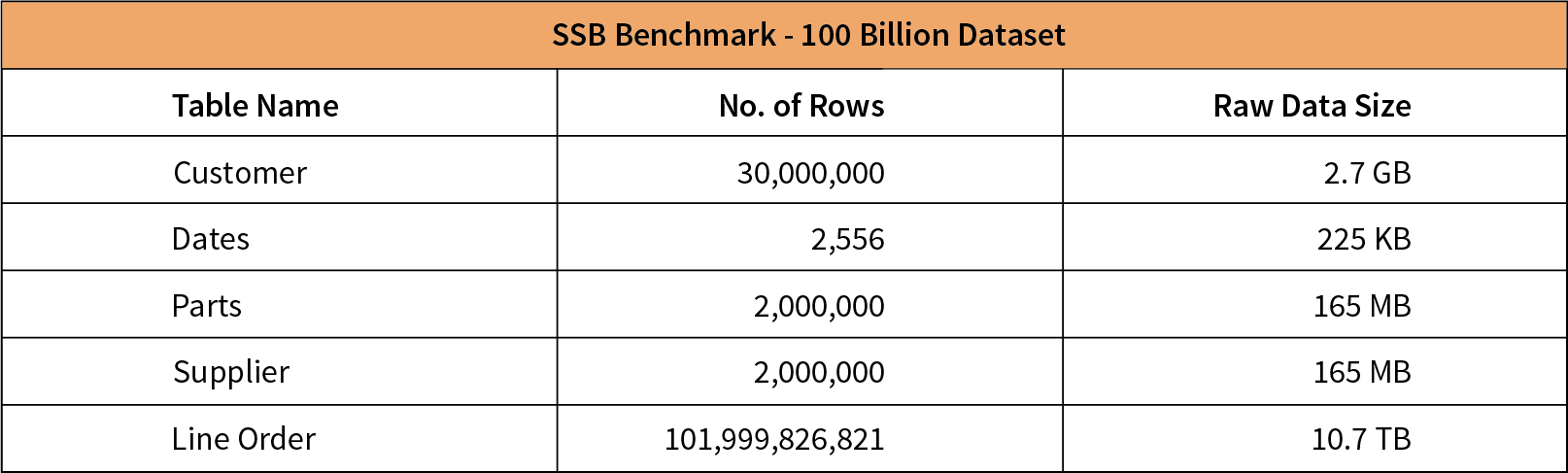

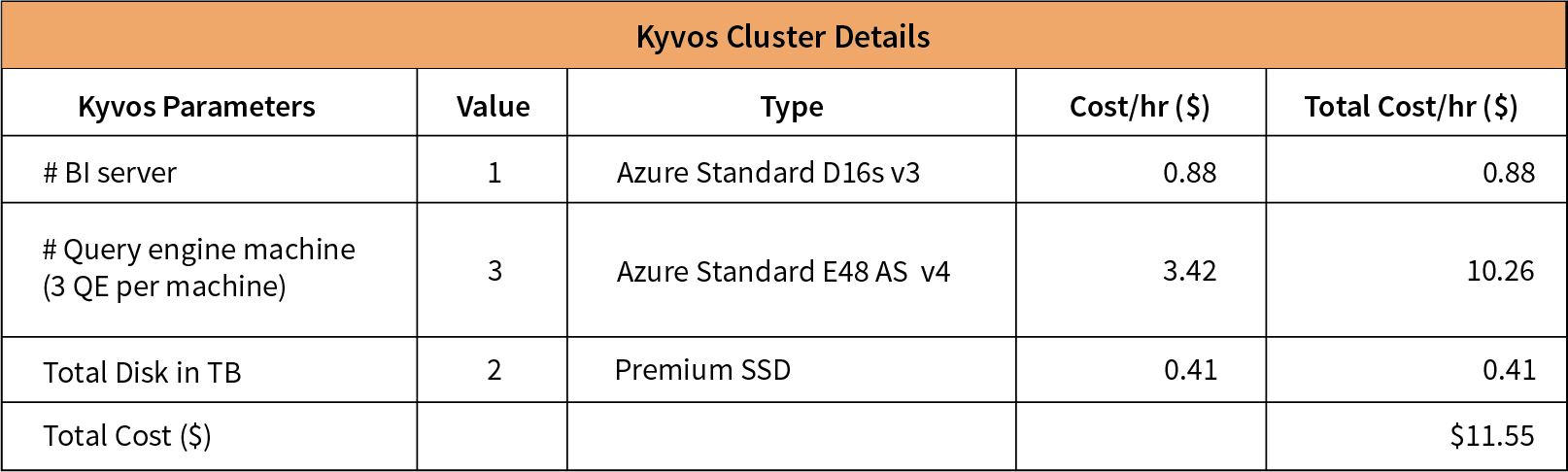

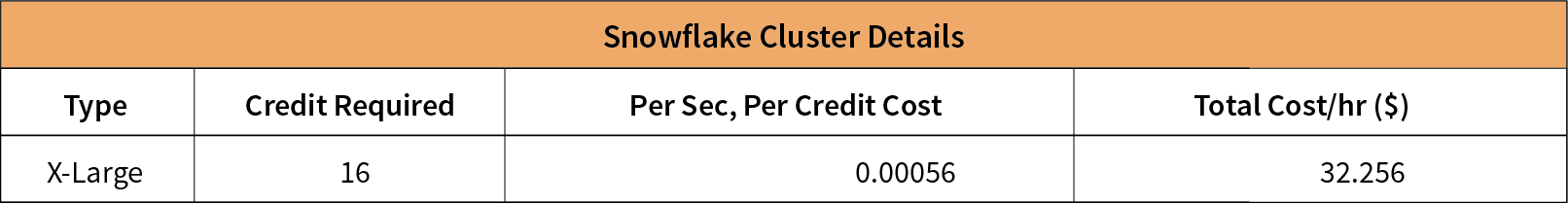

In a recent benchmarking study, we conducted a series of performance tests to analyze response time and cost for Star Schema Benchmark (SSB) queries on both Kyvos and Snowflake. These tests were carried out on the SSB dataset available in the public domain. We used thirteen queries for answering business problems. These queries were executed across large tables, including a 100 billion row fact table and a high-cardinality dimension.

Table 1: Benchmarking Dataset and Cluster Details

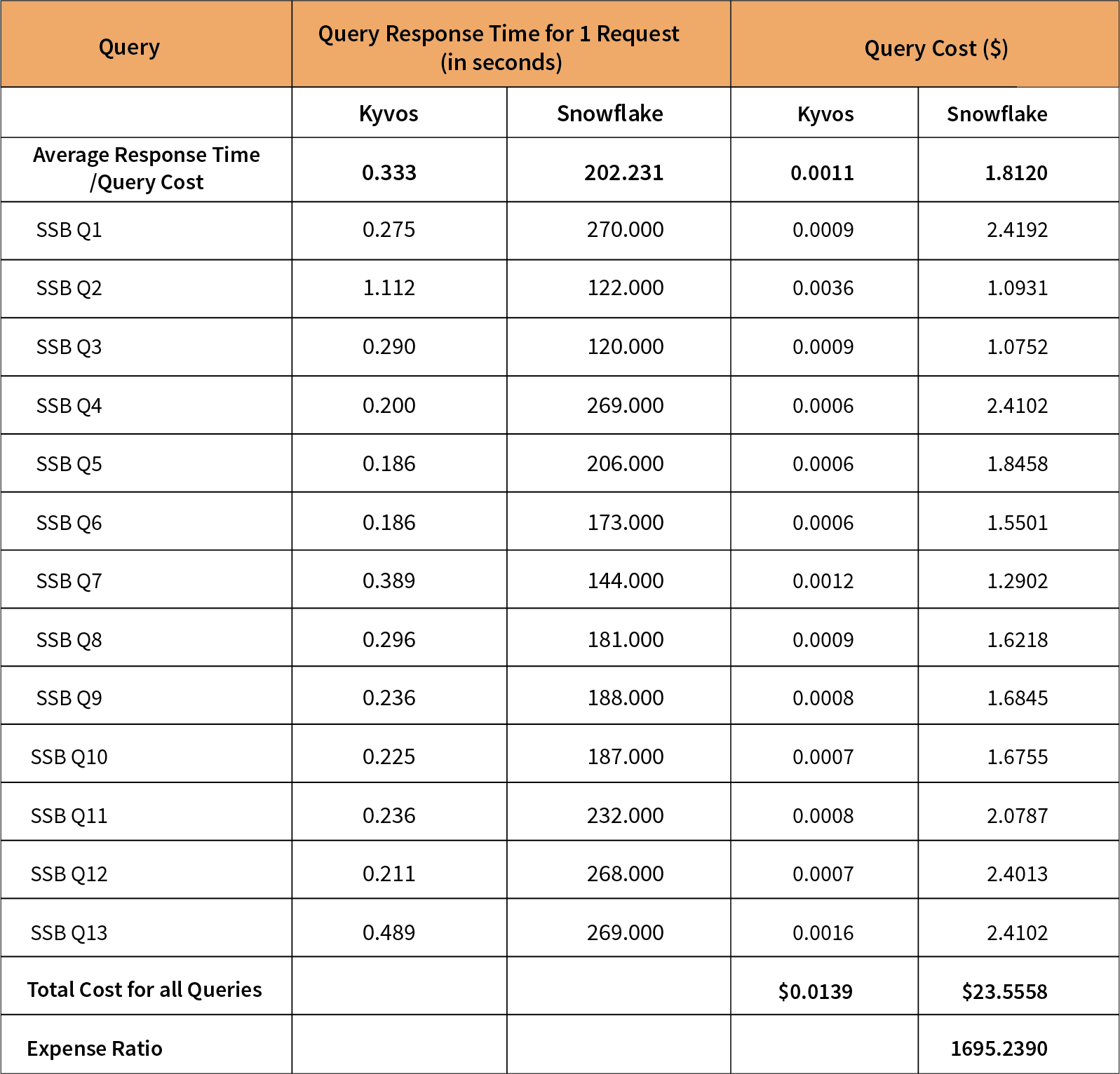

The table below shows the comparison between Kyvos and Snowflake costs for a single request.

Table 2: Kyvos and Snowflake Cost and Performance Comparisons

This shows:

- Kyvos is 1695x more cost-effective than Snowflake in this scenario.

- Kyvos provides 600x faster query performance than sending the queries directly to Snowflake.

The cost difference becomes more significant as concurrency increases. When the concurrency was increased to 25, Kyvos was 3914x more cost-effective than Snowflake.

Note: The above cost comparisons do not include cube building and software licensing costs. For details on the total cost of ownership, get in touch with us now.

Snowflake Cost Optimization with Kyvos

In the new era of data warehousing technologies, most businesses are already in the cloud or making their way towards it. With the volume and velocity at which their data is landing, it’s imperative for businesses to take account of this data and use it for competitive advantage, while at the same time ensuring that they are optimizing their BI spend.

With a solution like Kyvos in your architecture, you can eliminate a lot of Snowflake costs while improving overall performance, concurrency, and cost predictability.

If you want to learn more about how Kyvos enables Snowflake cost optimization, request a demo now.

FAQ

How can I reduce costs while using Snowflake as my data warehouse?

Use Kyvos to cut down querying costs on Snowflake. Its patented algorithms read data from Snowflake and build pre-processed semantic models that are stored in the cloud itself, even on extremely large datasets, regardless of the number of dimensions, measures, or granularity. When a query is fired, it is served directly from the semantic models, reducing compute costs significantly.

What are the best practices for optimizing the Snowflake warehouse costs?

Organizations can optimize their Snowflake warehouse costs by investing in an elastic analytics architecture that does the heavy pre-processing up front to make queries lightweight. A scalable infrastructure allows any number of users to fire queries at once without sacrificing speed or performance. Kyvos offers all this and more with its patented algorithms, which have a proven track record.

How can I minimize unnecessary data storage costs in Snowflake?

Using Kyvos, organizations can minimize their data storage costs in Snowflake. The platform pre-processes data to create semantic models that return query results in under a second. The models are stored in the cloud, and queries are served directly from the cube, reducing the storage requirements.

Are there any recommended approaches for optimizing query performance and reducing costs in Snowflake?

Smart pre-processing can help optimize query performance and reduce Snowflake costs. Our benchmarking report says that Kyvos is 1695x more cost-effective than Snowflake itself for running 13 SSB queries across a 100-billion-row fact table.